k8s-minio-st

Kubernetes MinIO Standalone Tenant

Template version: v25-10-30

MinIO image version: quay.io/minio/minio:RELEASE.2025-09-07T16-13-09Z

MinIO is a Kubernetes-native high performance object store with an S3-compatible API. The MinIO Kubernetes Operator supports deploying MinIO Tenants onto private and public cloud infrastructures ("Hybrid" Cloud).

This service creates a single-node MinIO Standalone Tenant for object storage.

Template override parameters

File _values-tpl.yaml contains template configuration parameters and their default values:

    #
    # _values-tpl.yaml
    #
    # cskygen template default values file
    #
    _tplname: k8s-minio-st
    _tpldescription: Kubernetes MinIO Standalone Tenant
    _tplversion: 25-10-30

    #
    # Values to override
    #

    ## k8s cluster credentials kubeconfig file
    kubeconfig: config-k8s-mod

    namespace:
      ## k8s namespace name
      name: minio-st

    publishing:
      ## External api url used by mc
      miniourl: minio-st.cskylab.net
      ## External api url used by console
      consoleurl: minio-st-console.cskylab.net

    credentials:
      # MinIO root user credentials
      minio_accesskey: "admin"
      minio_secretkey: "NoFear21"

    certificate:
      ## Cert-manager clusterissuer
      clusterissuer: ca-test-internal

    registry:
      ## Proxy Repository for Docker
      proxy: harbor.cskylab.net/dockerhub

    localpvnodes: # (k8s node names)
      all_pv: k8s-mod-n1
      # k8s nodes domain name
      domain: cskylab.net
      # k8s nodes local administrator
      localadminusername: kos

    localrsyncnodes: # (k8s node names)
      all_pv: k8s-mod-n2
      # k8s nodes domain name
      domain: cskylab.net
      # k8s nodes local administrator
      localadminusername: kos

TL;DR

Prepare the LVM data services for the PVs as described in the LVM Data Services section.

Install namespace and charts:

    # Install
    ./csdeploy.sh -m install

    # Check status
    ./csdeploy.sh -l

Access:

- Console URL: {{ .publishing.consoleurl }}

- MinIO URL: {{ .publishing.miniourl }}

- AccessKey: {{ .credentials.minio_accesskey }}

- SecretKey: {{ .credentials.minio_secretkey }}

Prerequisites

- MinIO Operator must be installed in K8s Cluster.

- Administrative access to Kubernetes cluster.

LVM Data Services

Data services are supported by the following nodes:

| Data service | Kubernetes PV node | Kubernetes RSync node |
|---|---|---|
| /srv/{{ .namespace.name }} | {{ .localpvnodes.all_pv }} | {{ .localrsyncnodes.all_pv }} |

PV node is the node that supports the data service in normal operation.

RSync node is the node that receives data service copies synchronized by cron-jobs for HA.

To create the corresponding LVM data services, execute from your mcc management machine the following commands:

    #
    # Create LVM data services in PV node
    #
    echo \
    && echo "******** START of snippet execution ********" \
    && echo \
    && ssh {{ .localpvnodes.localadminusername }}@{{ .localpvnodes.all_pv }}.{{ .localpvnodes.domain }} \
      'sudo cs-lvmserv.sh -m create -qd "/srv/{{ .namespace.name }}" \
    && mkdir "/srv/{{ .namespace.name }}/data/minio-st"' \
    && echo \
    && echo "******** END of snippet execution ********" \
    && echo

    #
    # Create LVM data services in RSync node
    #
    echo \
    && echo "******** START of snippet execution ********" \
    && echo \
    && ssh {{ .localrsyncnodes.localadminusername }}@{{ .localrsyncnodes.all_pv }}.{{ .localrsyncnodes.domain }} \
      'sudo cs-lvmserv.sh -m create -qd "/srv/{{ .namespace.name }}" \
    && mkdir "/srv/{{ .namespace.name }}/data/minio-st"' \
    && echo \
    && echo "******** END of snippet execution ********" \
    && echo
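To illustrate how the templated SSH destination in the commands above expands, the following sketch assembles it from the three node values. The helper name `ssh_target` is hypothetical and not part of the template; the sample values are the defaults from _values-tpl.yaml.

```shell
# Hypothetical helper (not part of the template): builds user@node.domain
# the same way the snippets compose their ssh destination.
ssh_target() {
  # $1 = localadminusername, $2 = all_pv (node name), $3 = domain
  printf '%s@%s.%s\n' "$1" "$2" "$3"
}

# Default values from _values-tpl.yaml
ssh_target kos k8s-mod-n1 cskylab.net   # PV node
ssh_target kos k8s-mod-n2 cskylab.net   # RSync node
```

Checking the rendered destination before running the snippets helps catch a wrong node name or domain in the override values.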

To delete the corresponding LVM data services, execute from your mcc management machine the following commands:

    #
    # Delete LVM data services in PV node
    #
    echo \
    && echo "******** START of snippet execution ********" \
    && echo \
    && ssh {{ .localpvnodes.localadminusername }}@{{ .localpvnodes.all_pv }}.{{ .localpvnodes.domain }} \
      'sudo cs-lvmserv.sh -m delete -qd "/srv/{{ .namespace.name }}"' \
    && echo \
    && echo "******** END of snippet execution ********" \
    && echo

    #
    # Delete LVM data services in RSync node
    #
    echo \
    && echo "******** START of snippet execution ********" \
    && echo \
    && ssh {{ .localrsyncnodes.localadminusername }}@{{ .localrsyncnodes.all_pv }}.{{ .localrsyncnodes.domain }} \
      'sudo cs-lvmserv.sh -m delete -qd "/srv/{{ .namespace.name }}"' \
    && echo \
    && echo "******** END of snippet execution ********" \
    && echo

Persistent Volumes

Review values in all Persistent volume manifests with the name format ./pv-*.yaml.

The following PersistentVolume & StorageClass manifests are applied:

    # PV manifests
    pv-minio.yaml

The node assigned in the nodeAffinity section of the PV manifest will be used when scheduling the pod that holds the service.
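Once the PV exists, the node it is pinned to can be read back from the cluster. The sketch below is a minimal example, assuming the PV uses a single nodeSelectorTerm with a single matchExpression (the usual shape for local volumes). The function name `pv_node` and the PV name in the usage line are placeholders, not names taken from the template.

```shell
# Assumption: the PV created from pv-minio.yaml pins one node through
# one nodeSelectorTerm with one matchExpression.
pv_node() {
  # $1 = PersistentVolume name
  kubectl get pv "$1" \
    -o jsonpath='{.spec.nodeAffinity.required.nodeSelectorTerms[0].matchExpressions[0].values[0]}'
}

# Usage (placeholder PV name, list the real ones with `kubectl get pv`):
# pv_node my-minio-pv
```

The value printed should match the PV node configured in `localpvnodes.all_pv`.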

How-to guides

Install

Check the version of the following image in the mod-tenant.yaml file and update it if necessary:

    ## Registry location and Tag to download MinIO Server image
    image: quay.io/minio/minio:RELEASE.YYYY-MM-DDTHH-MM-SSZ
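Because the release tag embeds a fixed-width, zero-padded UTC timestamp, two tags can be compared as plain strings. The following sketch shows one way to do that; the helper names `image_tag` and `newest_tag` are hypothetical, and the candidate tag is a made-up value used only for illustration.

```shell
# Strip the registry/repository part of an image reference, keeping the tag.
image_tag() {
  printf '%s\n' "${1##*:}"
}

# Print the more recent of two MinIO release tags.
# RELEASE.YYYY-MM-DDTHH-MM-SSZ is fixed-width and zero-padded, so
# byte-wise string order matches chronological order.
newest_tag() {
  printf '%s\n' "$1" "$2" | LC_ALL=C sort | tail -n 1
}

current=$(image_tag "quay.io/minio/minio:RELEASE.2025-09-07T16-13-09Z")
candidate="RELEASE.2025-01-01T00-00-00Z"   # made-up tag, for illustration only
newest_tag "$current" "$candidate"
```

If the tag printed differs from the one currently in mod-tenant.yaml, the image line is a candidate for an update.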

To check for the latest image version see:

To create namespace and install MinIO tenant:

    # Install namespace and tenant
    ./csdeploy.sh -m install

Update

Check image versions and update if necessary.

To reapply manifests and upgrade images:

    # Reapply manifests and update images
    ./csdeploy.sh -m update

Delete

WARNING: This action will delete MinIO tenant. All MinIO Storage Data in K8s Cluster will be erased.

To delete MinIO tenant, remove namespace and PV's run:

    # Delete tenant, PV's and namespace
    ./csdeploy.sh -m delete

Remove

This option is intended only for removing the namespace when the tenant deployment has failed. Otherwise, you must run ./csdeploy.sh -m delete.

To remove PV's, namespace and all its contents run:

    # Remove PV's, namespace and all its contents
    ./csdeploy.sh -m remove

Display status

To display namespace, persistence and tenant status run:

    # Display namespace, persistence and tenant status
    ./csdeploy.sh -l
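For reference, a rough manual equivalent of such a status listing could look like the sketch below. It is an assumption about what is useful to inspect, not the actual content of csdeploy.sh; the function name `tenant_status` is hypothetical, and `tenants.minio.min.io` is the Tenant resource registered by the MinIO Operator.

```shell
# Rough manual status check; csdeploy.sh -l may show more or different data.
tenant_status() {
  ns=$1                                      # namespace name
  kubectl get namespace "$ns"                # namespace
  kubectl get pv                             # persistence (PVs are cluster-scoped)
  kubectl -n "$ns" get tenants.minio.min.io  # tenant resource
  kubectl -n "$ns" get pods -o wide          # pods and the nodes they run on
}

# Usage, with the default namespace name from _values-tpl.yaml:
# tenant_status minio-st
```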

Backup & data protection

Backup & data protection must be configured in the file cs-cron-scripts on the node that supports the data services.

RSync HA copies

RSync cronjobs are used to achieve service HA for the LVM data services that support the persistent volumes. The script cs-rsync.sh performs the following actions:

- Take a snapshot of LVM data service in the node that supports the service (PV node)

- Copy and synchronize the data to the mirrored data service in the Kubernetes node designated for HA (RSync node)

- Remove snapshot in LVM data service
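The three steps above can be sketched with plain LVM and rsync commands. This is a dry-run illustration that only prints the commands; it is not the implementation of cs-rsync.sh. The volume group, logical volume name, snapshot size and mount point are all assumptions chosen for the example.

```shell
# Dry-run sketch: prints the commands of one snapshot-based sync cycle
# instead of running them. All names and the 1G size are placeholders.
# The ( ) body runs in a subshell, so the variables stay local.
snapshot_sync_plan() (
  vg=$1      # volume group holding the data service
  lv=$2      # logical volume of the data service
  dest=$3    # rsync destination on the RSync node
  snap="${lv}-snap"
  mnt="/mnt/${snap}"

  # 1. Take a point-in-time snapshot of the volume
  echo "lvcreate --snapshot --size 1G --name ${snap} /dev/${vg}/${lv}"
  # 2. Copy from the snapshot, not from the live volume
  echo "mkdir -p ${mnt}"
  echo "mount -o ro /dev/${vg}/${snap} ${mnt}"
  echo "rsync -a --delete ${mnt}/ ${dest}"
  # 3. Remove the snapshot so it stops consuming space
  echo "umount ${mnt}"
  echo "lvremove -f /dev/${vg}/${snap}"
)

snapshot_sync_plan vg0 minio-st k8s-mod-n2.cskylab.net:/srv/minio-st/
```

Copying from a snapshot gives rsync a consistent view of the data even while the service keeps writing to the live volume.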

To perform RSync manual copies on demand, execute from your mcc management machine the following commands:

Warning: You should not make two copies at the same time. You must check the scheduled jobs in cs-cron-scripts and disable them if necessary, in order to avoid conflicts.

    #
    # RSync data services
    #
    echo \
    && echo "******** START of snippet execution ********" \
    && echo \
    && ssh {{ .localpvnodes.localadminusername }}@{{ .localpvnodes.all_pv }}.{{ .localpvnodes.domain }} \
      'sudo cs-rsync.sh -q -m rsync-to -d /srv/{{ .namespace.name }} \
      -t {{ .localrsyncnodes.all_pv }}.{{ .localrsyncnodes.domain }}' \
    && echo \
    && echo "******** END of snippet execution ********" \
    && echo
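The warning about concurrent copies can also be enforced mechanically. The sketch below shows a generic directory-based lock, a common shell technique that is independent of the cs-* scripts; the function name `with_lock` and the lock path are hypothetical.

```shell
# Run a command only if no other run holds the lock.
# The ( ) body runs in a subshell, so `exit` only leaves the function.
with_lock() (
  lockdir=$1
  shift
  # mkdir is atomic: exactly one caller can create the directory.
  if ! mkdir "$lockdir" 2>/dev/null; then
    echo "lock $lockdir is held, not starting a second copy" >&2
    exit 1
  fi
  "$@"
  status=$?
  rmdir "$lockdir"
  exit "$status"
)

# Demo with a harmless command in place of the real copy
with_lock "${TMPDIR:-/tmp}/cs-rsync-demo.lock" echo "sync would run here"
```

Note that a job killed mid-run leaves the lock directory behind, so it would have to be removed by hand before the next run.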

RSync cronjobs:

The following cron jobs should be added to file cs-cron-scripts on the node that supports the service (PV node). Change time schedule as needed:

    ################################################################################
    # /srv/{{ .namespace.name }} - RSync LVM data services
    ################################################################################
    ##
    ## RSync path:  /srv/{{ .namespace.name }}
    ## To Node:     {{ .localrsyncnodes.all_pv }}
    ## At minute 0 past every hour from 8 through 23.
    # 0 8-23 * * *     root run-one cs-lvmserv.sh -q -m snap-remove -d /srv/{{ .namespace.name }} >> /var/log/cs-rsync.log 2>&1 ; run-one cs-rsync.sh -q -m rsync-to -d /srv/{{ .namespace.name }} -t {{ .localrsyncnodes.all_pv }}.{{ .localrsyncnodes.domain }} >> /var/log/cs-rsync.log 2>&1

Restic backup

Restic can be configured to perform data backups to local USB disks, remote disks via SFTP, or S3 cloud storage.

To perform on-demand restic backups execute from your mcc management machine the following commands:

Warning: You should not launch two backups at the same time. You must check the scheduled jobs in cs-cron-scripts and disable them if necessary, in order to avoid conflicts.

    #
    # Restic backup data services
    #
    echo \
    && echo "******** START of snippet execution ********" \
    && echo \
    && ssh {{ .localpvnodes.localadminusername }}@{{ .localpvnodes.all_pv }}.{{ .localpvnodes.domain }} \
      'sudo cs-restic.sh -q -m restic-bck -d /srv/{{ .namespace.name }} -t {{ .namespace.name }}' \
    && echo \
    && echo "******** END of snippet execution ********" \
    && echo

To view available backups:

    echo \
    && echo "******** START of snippet execution ********" \
    && echo \
    && ssh {{ .localpvnodes.localadminusername }}@{{ .localpvnodes.all_pv }}.{{ .localpvnodes.domain }} \
      'sudo cs-restic.sh -q -m restic-list -t {{ .namespace.name }}' \
    && echo \
    && echo "******** END of snippet execution ********" \
    && echo

Restic cronjobs:

The following cron jobs should be added to file cs-cron-scripts on the node that supports the service (PV node). Change time schedule as needed:

    ################################################################################
    # /srv/{{ .namespace.name }} - Restic backups
    ################################################################################
    ##
    ## Data service:  /srv/{{ .namespace.name }}
    ## At minute 30 past every hour from 8 through 23.
    # 30 8-23 * * *   root run-one cs-lvmserv.sh -q -m snap-remove -d /srv/{{ .namespace.name }} >> /var/log/cs-restic.log 2>&1 ; run-one cs-restic.sh -q -m restic-bck -d /srv/{{ .namespace.name }} -t {{ .namespace.name }} >> /var/log/cs-restic.log 2>&1 && run-one cs-restic.sh -q -m restic-forget -t {{ .namespace.name }} -f "--keep-hourly 6 --keep-daily 31 --keep-weekly 5 --keep-monthly 13 --keep-yearly 10" >> /var/log/cs-restic.log 2>&1
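The retention flags in the cron job above are standard `restic forget` options. As a minimal sketch, assuming cs-restic.sh passes the `-f` string through to restic unchanged (not confirmed here), the equivalent direct call would be the following; the function name `forget_old_snapshots` and the repository path are placeholders.

```shell
# Assumption: cs-restic.sh hands the -f string to `restic forget` as is.
forget_old_snapshots() {
  # $1 = restic repository location
  restic -r "$1" forget \
    --keep-hourly 6 --keep-daily 31 --keep-weekly 5 \
    --keep-monthly 13 --keep-yearly 10
}

# Usage (needs the repository password, e.g. via RESTIC_PASSWORD):
# forget_old_snapshots /path/to/restic-repo
```

Each `--keep-*` option keeps the most recent snapshot for each of the last N hours, days, weeks, months or years that have one. `forget` on its own only removes snapshot entries; the data they referenced is reclaimed by `restic prune`.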

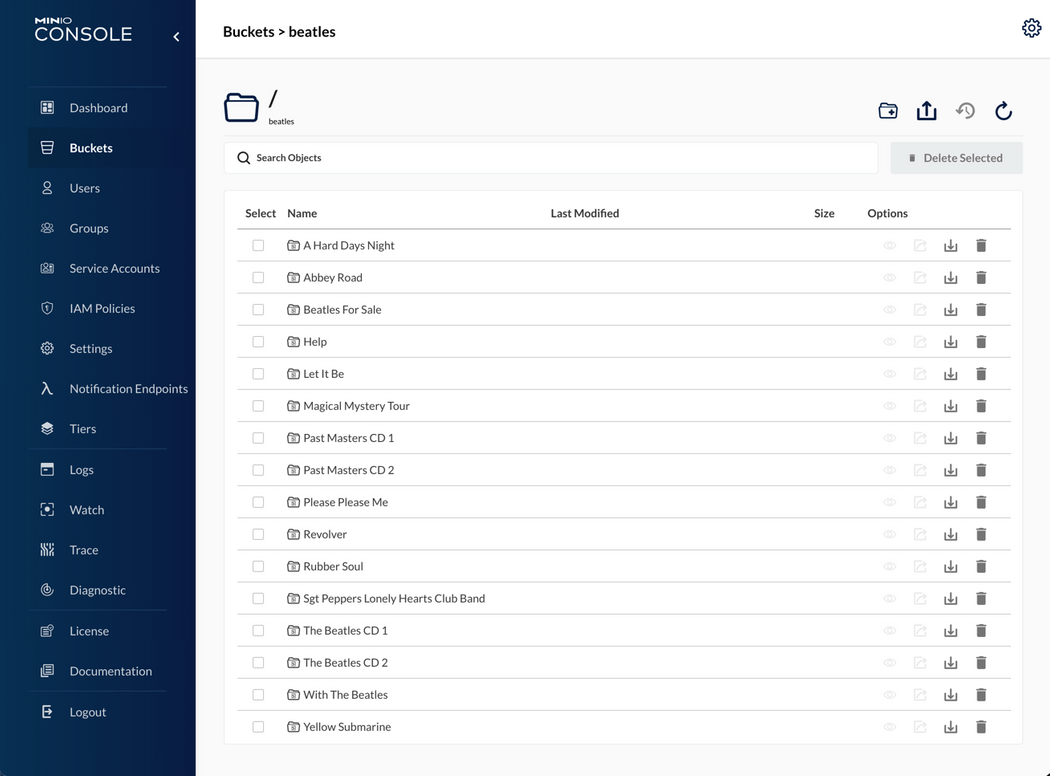

Bucket maintenance

Buckets can be created together with users and policies for ReadWrite, ReadOnly and WriteOnly access.

For each bucket, a record file named bucket_name.config will be created in the ./buckets folder under configuration management.

Additionally, a source environment file for MinIO bucket access and restic operations will be created in the form source-bucket_name.sh. This file can be used for restic backup operations with the script csrestic-minio.sh. It can also be sourced from a management console to initialize the variables needed to access the bucket through the MinIO client mc and the restic repository through restic commands.
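As an illustration of what such a source file could export, the sketch below uses the environment variables that mc and the restic S3 backend read. The actual file is generated by csbucket.sh and its exact contents are not shown in this document, so the variable set and every value here are assumptions.

```shell
# Sketch of a source-mybucket.sh style file. All values are placeholders.
bucket="mybucket"
endpoint="minio-st.cskylab.net"          # default miniourl
access_key="REPLACE_WITH_ACCESS_KEY"
secret_key="REPLACE_WITH_SECRET_KEY"

# mc reads MC_HOST_<alias> to define a host alias from the environment.
export MC_HOST_minio="https://${access_key}:${secret_key}@${endpoint}"

# restic's S3 backend reads these variables.
export AWS_ACCESS_KEY_ID="${access_key}"
export AWS_SECRET_ACCESS_KEY="${secret_key}"
export RESTIC_REPOSITORY="s3:https://${endpoint}/${bucket}"
export RESTIC_PASSWORD="REPLACE_WITH_REPOSITORY_PASSWORD"
```

After sourcing a file like this, commands such as `mc ls minio/mybucket` or `restic snapshots` would pick up the connection details from the environment.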

Create bucket, users and policies

To create bucket, users and policies:

    # Create Bucket & Users & Policies
    ./csbucket.sh -c mybucket

In this case a file named ./buckets/mybucket.config will be created with the access and secret keys used for the following users:

- mybucket_rw (ReadWrite user)

- mybucket_ro (ReadOnly user)

- mybucket_wo (WriteOnly user)

You can use these keys for specific access to the bucket from any application or user.
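To make the three access levels concrete, the sketch below prints an S3-style policy document for each mode. The policies that csbucket.sh actually creates are not shown in this document, so the action lists are an assumption about what ReadOnly, WriteOnly and ReadWrite typically mean; the function name `bucket_policy` is hypothetical.

```shell
# Print an S3 policy document for one bucket.
# $1 = bucket name, $2 = ro | wo | rw
# The ( ) body runs in a subshell, so `exit` only leaves the function.
bucket_policy() (
  case "$2" in
    ro) actions='"s3:GetBucketLocation", "s3:ListBucket", "s3:GetObject"' ;;
    wo) actions='"s3:PutObject"' ;;
    rw) actions='"s3:GetBucketLocation", "s3:ListBucket", "s3:GetObject", "s3:PutObject", "s3:DeleteObject"' ;;
    *)  echo "unknown mode: $2" >&2; exit 1 ;;
  esac
  cat <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [${actions}],
      "Resource": ["arn:aws:s3:::$1", "arn:aws:s3:::$1/*"]
    }
  ]
}
EOF
)

bucket_policy mybucket ro
```

A document like this can be saved to a file and registered with the MinIO client's `mc admin policy` commands, then attached to the matching user.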

Delete bucket, users and policies

To delete bucket, users and policies:

    # Delete Bucket & Users & Policies
    ./csbucket.sh -d mybucket

The file ./buckets/mybucket.config, which holds the access and secret keys, will also be deleted.

Display bucket, users and policies

To list current bucket, users and policies:

    # List Bucket & Users & Policies
    ./csbucket.sh -l

MinIO Client

Console utility

To access the MinIO console through the web utility:

- Console URL: {{ .publishing.consoleurl }}

- AccessKey: {{ .credentials.minio_accesskey }}

- SecretKey: {{ .credentials.minio_secretkey }}

Command line utility

If you have the MinIO client installed, you can use the mc utility from the command line.

The file .envrc automatically exports, through "direnv", the environment variable needed to operate mc with minio as hostname from its directory in the git repository:

    # MinIO host environment variable
    export MC_HOST_minio="https://{{ .credentials.minio_accesskey }}:{{ .credentials.minio_secretkey }}@{{ .publishing.miniourl }}"

You can run mc commands from the console to operate on buckets and files, for example: mc tree minio.

For more information: https://docs.min.io/docs/minio-client-complete-guide.html

Reference

To learn more see:

Scripts

csdeploy

    Purpose:
      Kubernetes MinIO Standalone Tenant.

    Usage:
      sudo csdeploy.sh [-l] [-m <execution_mode>] [-h] [-q]

    Execution modes:
      -l  [list-status]     - List current status.
      -m  <execution_mode>  - Valid modes are:

          [pull-charts]     - Pull charts to './charts/' directory.
          [install]         - Create namespace, secrets, config-maps, PV's,
                              apply manifests and install charts.
          [update]          - Reapply manifests and update or upgrade charts.
          [uninstall]       - Uninstall charts, delete manifests, remove PV's and namespace.
          [remove]          - Remove PV's, namespace and all its contents.

    Options and arguments:
      -h  Help
      -q  Quiet (Nonstop) execution.

    Examples:
      # Create namespace, secrets, config-maps, PV's, apply manifests and install charts.
        ./csdeploy.sh -m install

      # Reapply manifests and update or upgrade charts.
        ./csdeploy.sh -m update

      # Uninstall charts, delete manifests, remove PV's and namespace.
        ./csdeploy.sh -m uninstall

      # Remove PV's, namespace and all its contents
        ./csdeploy.sh -m remove

      # Display namespace, persistence and charts status:
        ./csdeploy.sh -l

csbucket

    Purpose:
      MinIO Bucket & User & Policy maintenance.
      Use this script to create or delete together a bucket
      with readwrite, readonly and writeonly users and access policies.

    Usage:
      sudo csbucket.sh [-l] [-c <bucket_name>] [-d <bucket_name>] [-h] [-q]

    Execution modes:
      -l  [list-status]   - List Buckets & Users & Policies.
      -c  <bucket_name>   - Create Bucket & Users & Policies
      -d  <bucket_name>   - Remove Bucket & Users & Policies

    Options and arguments:
      -h  Help
      -q  Quiet (Nonstop) execution.

    Examples:
      # Create Bucket & Users & Policies
        ./csbucket.sh -c mybucket

      # Delete Bucket & Users & Policies
        ./csbucket.sh -d mybucket

License

Copyright © 2025 cSkyLab.com ™

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the specific language governing permissions and limitations under the License.